Virtual threads in Java 21

Virtual threads have been in development for many years: Project Loom has worked for a long time to bring them to Java. After previews in Java 19 and 20, Java 21 finally delivered a production-ready implementation of the concept (JEP 444).

Why do we need virtual threads?

Most business applications are I/O-bound. CPU-bound applications are limited by the speed of the processor. I/O-bound applications, in contrast, are constrained by input/output operations, such as reading from and writing to disk or communicating over the network.

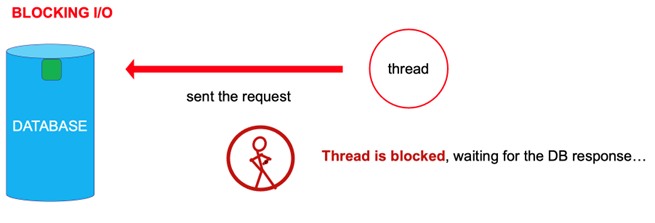

Whenever we access the file system with InputStream/OutputStream, or work with databases via JDBC or via Spring Data JPA or Hibernate (both built on top of JDBC), we are using blocking input/output. This means that once a thread sends a request to the database, it does not proceed to the next line of code until it receives the response. It is waiting; it is blocked.

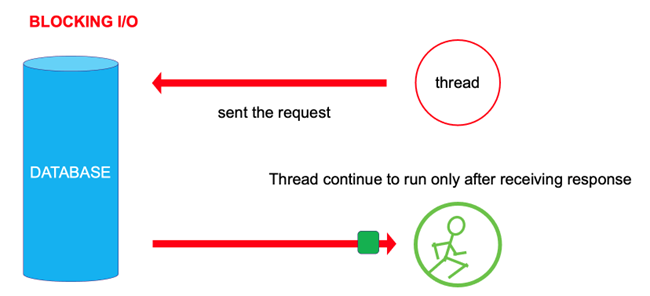

When the thread is blocked, it is doing nothing. It doesn't consume the CPU. It doesn’t serve the clients. It is just blocked. Only when the response is received, the thread is unblocked and able to process other requests from the users:
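To make the idea of a blocked thread concrete, here is a minimal sketch that uses only the JDK. The hypothetical `queryDatabase` method stands in for a JDBC call. Instead of reading from a real socket, the thread waits on a `BlockingQueue` until another thread puts a "response" into it. While it waits, `Thread.getState()` reports `WAITING`, and the thread uses no CPU.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class BlockedThreadDemo {

    // Hypothetical stand-in for a blocking database call: the caller waits until
    // a "response" is put into the queue, much like a thread waiting on a socket read.
    static String queryDatabase(BlockingQueue<String> responses) throws InterruptedException {
        return responses.take(); // the thread parks here and consumes no CPU
    }

    /** Returns the worker's state as observed while it waits for the "response". */
    public static Thread.State observeBlockedState() {
        BlockingQueue<String> responses = new ArrayBlockingQueue<>(1);
        Thread worker = new Thread(() -> {
            try {
                System.out.println("received: " + queryDatabase(responses));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        try {
            // Poll until the worker is parked inside take() (give up after about 2 s).
            Thread.State state = worker.getState();
            for (int i = 0; i < 200 && state != Thread.State.WAITING; i++) {
                Thread.sleep(10);
                state = worker.getState();
            }
            responses.put("row-42"); // the response arrives, so the worker unblocks
            worker.join();
            return state;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("state while waiting: " + observeBlockedState());
    }
}
```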

As a result, we need to create many more threads than there are CPU cores. Most of these threads are not consuming CPU. They are simply blocked, waiting for an I/O response.

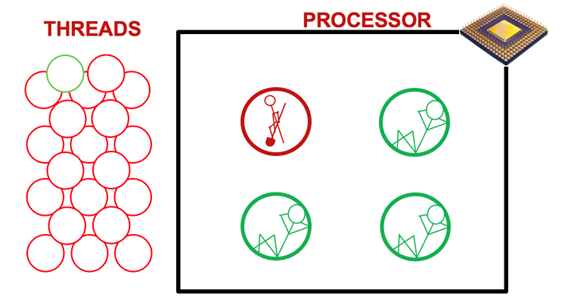

As you can see in the illustration below, CPU cores are underutilized, but the number of threads is very high — most of them are blocked waiting for an I/O response:

In theory, the most efficient setup would have as many threads as CPU cores. In reality, many threads are blocked, so we are forced to create far more threads than that, and managing so many threads is expensive. Creating a thread takes time. Switching between threads has significant overhead, and with far fewer CPU cores than threads, we have to switch very often. Every thread also reserves a sizable amount of memory for its stack.
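The cost of blocking on a fixed number of threads can be sketched in a few lines. The sketch assumes a hypothetical `blockingCall` that simply sleeps to imitate I/O latency. A pool of 2 threads must run 8 calls of 100 ms in at least 4 sequential rounds, so the batch takes at least 400 ms even though the CPU is almost idle. With platform threads, the only way to go faster is to add more threads.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class FixedPoolBottleneck {

    // Hypothetical blocking call that holds its thread for `latency` without using CPU.
    static void blockingCall(Duration latency) {
        try {
            Thread.sleep(latency);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Runs `tasks` blocking calls on a pool of `poolSize` threads and returns the elapsed milliseconds. */
    public static long run(int poolSize, int tasks, Duration latency) {
        long start = System.nanoTime();
        // ExecutorService is AutoCloseable since Java 19; close() waits for all tasks.
        try (ExecutorService pool = Executors.newFixedThreadPool(poolSize)) {
            for (int i = 0; i < tasks; i++) {
                pool.submit(() -> blockingCall(latency));
            }
        }
        return Duration.ofNanos(System.nanoTime() - start).toMillis();
    }

    public static void main(String[] args) {
        // 8 calls of 100 ms on 2 threads need at least 4 rounds (>= 400 ms),
        // even though the CPU is idle almost the whole time.
        System.out.println("elapsed ms: " + run(2, 8, Duration.ofMillis(100)));
    }
}
```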

The CPU is forced to switch between threads like a juggler throwing balls. To improve the situation, we can use non-blocking input-output together with asynchronous or reactive programming. We will compare these approaches at the end of the article, but first, let’s discuss the concept and benefits of virtual threads.

How do virtual threads work?

What are the benefits of virtual threads? First, they start very quickly: about one microsecond, compared to about one millisecond for a regular (platform) thread. They also require far less memory, and the overhead of switching between them is minimal.
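Java 21 provides virtual threads through the `Thread` API. The sketch below uses the `Thread.ofVirtual()` builder and the `Thread.startVirtualThread` shortcut. The class and method names are illustrative. The sketch does not measure start-up time, because timings depend on the machine. It only checks that every task really ran on a virtual thread.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class CreateVirtualThreads {

    /** Starts `count` (>= 1) virtual threads and returns how many saw themselves as virtual. */
    public static int startVirtual(int count) {
        AtomicInteger virtualSeen = new AtomicInteger();
        Runnable task = () -> {
            if (Thread.currentThread().isVirtual()) virtualSeen.incrementAndGet();
        };

        // Builder style: threads are named worker-0, worker-1, ...
        Thread.Builder builder = Thread.ofVirtual().name("worker-", 0);
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count - 1; i++) {
            threads.add(builder.start(task));
        }
        // Shortcut style for the last one: an unnamed virtual thread.
        threads.add(Thread.startVirtualThread(task));

        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return virtualSeen.get();
    }

    public static void main(String[] args) {
        System.out.println(startVirtual(10_000) + " virtual threads ran");
    }
}
```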

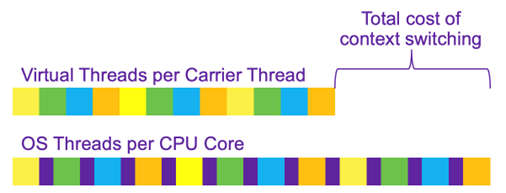

How do they work? Many virtual threads can share the same operating system thread. Such an OS thread, called a carrier thread, can run a large number of virtual threads, one at a time.

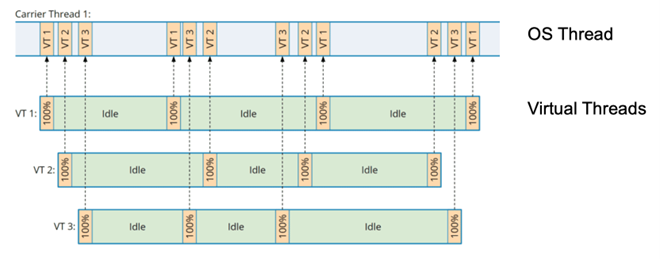

Let’s take a look at the diagram:

A carrier thread is a regular OS (platform) thread. To minimize context-switch overhead, we should usually have as many carrier threads as CPU cores.

The carrier thread executes tasks. These tasks are the virtual threads (VT1, VT2, VT3), lightweight threads managed by the JVM rather than by the operating system. The OS switches between OS threads competing for a CPU core. Here, the JVM instead switches between virtual threads competing for the same carrier thread. This is much cheaper, because mounting and unmounting a virtual thread does not require an OS-level context switch.
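You can observe this multiplexing directly. The sketch below runs 10,000 virtual threads and collects the names of the carrier threads they ran on. It relies on one assumption: in current JDK builds, the `toString()` of a mounted virtual thread includes its carrier's name after an `@` (for example, `ForkJoinPool-1-worker-3`). That format is an implementation detail, not a public API, so treat this as a diagnostic trick only. By default, the scheduler's parallelism equals the number of available processors, and it can be tuned with the `jdk.virtualThreadScheduler.parallelism` system property. You should therefore typically see a few carriers, on the order of the number of cores.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class CarrierThreads {

    /** Runs `tasks` virtual threads and returns the names of the carriers they ran on. */
    public static Set<String> carriersUsed(int tasks) {
        Set<String> carriers = ConcurrentHashMap.newKeySet();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    // Assumption: a mounted virtual thread prints like
                    // "VirtualThread[#22]/runnable@ForkJoinPool-1-worker-3";
                    // the part after '@' names the carrier. Not a public API.
                    String s = Thread.currentThread().toString();
                    int at = s.indexOf('@');
                    if (at >= 0) carriers.add(s.substring(at + 1));
                });
            }
        } // close() waits for every task to finish
        return carriers;
    }

    public static void main(String[] args) {
        Set<String> carriers = carriersUsed(10_000);
        System.out.println("10000 virtual threads ran on " + carriers.size() + " carrier threads");
        System.out.println("available processors: " + Runtime.getRuntime().availableProcessors());
    }
}
```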

Virtual threads can be in different states:

- Runnable: The virtual thread is actively executing on the carrier thread.

- Waiting: The virtual thread is not currently executing because it's waiting for some condition to be met or for some I/O operation to complete.

- Blocked: The virtual thread is prevented from running due to a lock or monitor that it's trying to acquire but can't because another thread holds the lock.

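When a virtual thread is waiting or blocked, the carrier thread can pick up another virtual thread and start running it. This makes efficient use of the carrier threads and allows high concurrency, especially in I/O-bound applications. One caveat applies in Java 21: a virtual thread that blocks while inside a synchronized block or method is pinned to its carrier, so that carrier cannot run other virtual threads in the meantime. Locks from java.util.concurrent, such as ReentrantLock, do not have this limitation.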
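Where a blocking critical section is unavoidable, a `java.util.concurrent` lock such as `ReentrantLock` lets waiting virtual threads release their carrier. In Java 21, blocking inside `synchronized` pins the carrier instead. The following is a minimal sketch. In it, the hypothetical `incrementSlowly` method uses `Thread.sleep` to stand in for I/O performed while holding the lock.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

public class LockFriendlyCounter {

    private final ReentrantLock lock = new ReentrantLock();
    private long value;

    // Hypothetical critical section that blocks while holding the lock.
    // A virtual thread waiting in lock() or sleeping here unmounts and frees its carrier;
    // in Java 21, the same code inside a `synchronized` block would pin the carrier.
    void incrementSlowly() {
        lock.lock();
        try {
            Thread.sleep(Duration.ofMillis(1)); // stands in for blocking I/O
            value++;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
    }

    long value() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    /** Runs `tasks` increments, each on its own virtual thread, and returns the final count. */
    public static long run(int tasks) {
        LockFriendlyCounter counter = new LockFriendlyCounter();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(counter::incrementSlowly);
            }
        } // close() waits for all tasks
        return counter.value();
    }

    public static void main(String[] args) {
        System.out.println("final count: " + run(1_000));
    }
}
```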

The key takeaways are:

- Virtual threads are managed by the JVM and are mapped onto a smaller number of carrier threads.

- Carrier threads can execute tasks from different virtual threads, switching between them as needed.

- This model allows a large number of virtual threads to be used efficiently, even when the number of OS (carrier) threads is much smaller, which improves scalability and performance in I/O-bound applications.

The main benefits of virtual threads are:

- Very fast start (1ms for regular threads vs 1μs for virtual)

- Minimal memory usage

- Minimal context switch overhead

We can create millions of virtual threads and use a thread-per-task approach. With blocking I/O, most of our threads will be blocked, but that is no longer a problem: we can create as many threads as we need.
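The thread-per-task style maps directly onto `Executors.newVirtualThreadPerTaskExecutor()`, which starts a new virtual thread for every submitted task. The sketch below follows the pattern of the JEP 444 example. `Thread.sleep` stands in for a blocking I/O call, and the numbers are illustrative. Sleeping virtual threads release their carriers, so on a typical machine 100,000 one-second tasks should finish in little more than the one-second latency. A fixed pool of, say, 200 platform threads would need about 100,000 / 200 = 500 seconds for the same work.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadPerTask {

    /** Submits `tasks` blocking tasks, one virtual thread each, and returns how many completed. */
    public static int run(int tasks, Duration latency) {
        AtomicInteger completed = new AtomicInteger();
        // One new virtual thread per submitted task: no pool sizing decisions.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    Thread.sleep(latency); // stands in for a blocking I/O call
                    completed.incrementAndGet();
                    return null;
                });
            }
        } // close() blocks until all tasks have finished
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = run(100_000, Duration.ofSeconds(1));
        long ms = Duration.ofNanos(System.nanoTime() - start).toMillis();
        System.out.println(done + " tasks finished in " + ms + " ms");
    }
}
```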

Virtual threads versus reactive programming

The next thing to consider is how this approach compares with reactive programming, a very popular paradigm in the Java world. Under high load, reactive programming can deliver about double the performance of regular threads and thread pools. However, it comes at the price of much more complicated code.

Compared with virtual threads, reactive programming is still ahead in performance, but the gap is much smaller. Reactive code may be 10-20% faster, and only under high load. In all other cases, performance is similar, while the programming model is much simpler. Simplicity is a top priority when developing today's huge and often over-complicated systems. In such systems, scalability and maintainability matter much more than the peak performance of a single instance.
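To illustrate the difference in programming model, the sketch below writes the same two-step operation in two styles. `CompletableFuture` serves as a dependency-free stand-in for asynchronous, callback-chained code. It is not a reactive library such as Project Reactor, but the shape is similar: each step becomes a stage in a chain. The service methods `fetchUser` and `fetchOrders` are hypothetical placeholders for blocking calls.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class StyleComparison {

    // Hypothetical blocking service calls (stand-ins for JDBC or HTTP requests).
    static String fetchUser(int id) { return "user-" + id; }
    static List<String> fetchOrders(String user) { return List.of(user + ":order-1", user + ":order-2"); }

    // Asynchronous style: every step is a stage chained onto a future.
    static CompletableFuture<Integer> countOrdersAsync(int id, Executor executor) {
        return CompletableFuture.supplyAsync(() -> fetchUser(id), executor)
                .thenApplyAsync(StyleComparison::fetchOrders, executor)
                .thenApply(List::size);
    }

    // Blocking style: plain sequential code, meant to run on a virtual thread.
    static int countOrdersBlocking(int id) {
        String user = fetchUser(id);
        return fetchOrders(user).size();
    }

    /** Returns {asyncResult, blockingResult}; both styles should agree. */
    public static int[] compare(int id) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            int viaAsync = countOrdersAsync(id, executor).join();
            // supplyAsync is used here only to run the plain method on a virtual thread.
            int viaBlocking = CompletableFuture.supplyAsync(() -> countOrdersBlocking(id), executor).join();
            return new int[] {viaAsync, viaBlocking};
        }
    }

    public static void main(String[] args) {
        int[] r = compare(7);
        System.out.println("async: " + r[0] + ", blocking: " + r[1]);
    }
}
```

With only two steps, the difference is modest. In real reactive code, error handling, retries, and combining several sources are all expressed as further operators in the chain. In the blocking version, they remain ordinary `try`/`catch` blocks and loops.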

Will virtual threads kill reactive programming? Brian Goetz, Java Language Architect at Oracle and the main trendsetter of contemporary Java, said in a recent interview:

“We are going [to] discover that reactive is a transitional technology. We are going to discover that we thought we liked reactive but actually, it sucks. And that it was just one of those [where] the alternative was worse, so it seemed good.”

However, considering all the money invested in the reactive approach, it will not die soon. Reactive programming is still more performant under high load. It also has a rich set of tools, such as Project Reactor (the foundation of Spring WebFlux), and it supports more complicated scenarios, such as built-in backpressure.
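Backpressure means that the consumer tells the producer how much data it is ready to accept. The JDK has shipped the Reactive Streams interfaces as `java.util.concurrent.Flow` since Java 9, so the idea can be sketched without Reactor. In the sketch below, the subscriber requests one item at a time. `SubmissionPublisher.submit` blocks the producer whenever the subscriber's small buffer is full. The class names and the buffer size of 4 are illustrative choices.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

public class BackpressureDemo {

    // A subscriber that asks for exactly one item at a time: the publisher
    // may not deliver more than has been requested (this is backpressure).
    static final class OneAtATime implements Flow.Subscriber<Integer> {
        final List<Integer> received = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription s) {
            subscription = s;
            s.request(1); // initial demand: one item
        }
        @Override public void onNext(Integer item) {
            received.add(item);      // "process" the item
            subscription.request(1); // signal demand for the next one
        }
        @Override public void onError(Throwable t) { done.countDown(); }
        @Override public void onComplete() { done.countDown(); }
    }

    /** Publishes 1..items through a 4-slot buffer and returns what the subscriber received. */
    public static List<Integer> run(int items) {
        OneAtATime subscriber = new OneAtATime();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>(executor, 4)) {
                publisher.subscribe(subscriber);
                for (int i = 1; i <= items; i++) {
                    publisher.submit(i); // blocks the producer while the buffer is full
                }
            } // close() signals onComplete after the buffered items are delivered
            subscriber.done.await(); // wait for completion before shutting down the executor
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return subscriber.received;
    }

    public static void main(String[] args) {
        System.out.println("received " + run(1_000).size() + " items");
    }
}
```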

But with virtual threads, you can efficiently use blocking I/O. It is much easier to work with and more intuitive, it is supported by many mature libraries like Hibernate, and it is still much more common than the reactive approach.

At the Luxoft Training Center, we provide a variety of advanced trainings in Java, including the concepts of reactivity and virtual threads. We demonstrate use cases, measure performance, and analyze the advantages and disadvantages of every technology. Each training is enriched with illustrative material and a large number of code examples. If you are an experienced Java developer interested in further development and success in the highly competitive market, we recommend our intensive courses, where, in a very short amount of time, you will gain a deep understanding of modern approaches.

For those eager to elevate their Java skills, our upcoming Java courses offer an unparalleled opportunity to learn from industry experts. Delve into our comprehensive curriculum designed for both beginners and seasoned programmers at https://luxoft-training.com/schedule, where you'll find a schedule tailored to foster your development in this robust programming language. Whether you're looking to understand the basics or master advanced concepts, our courses are structured to provide you with the tools necessary for professional growth and success in the Java ecosystem.

Author:

Vladimir Sonkin

Lead teacher, Java expert